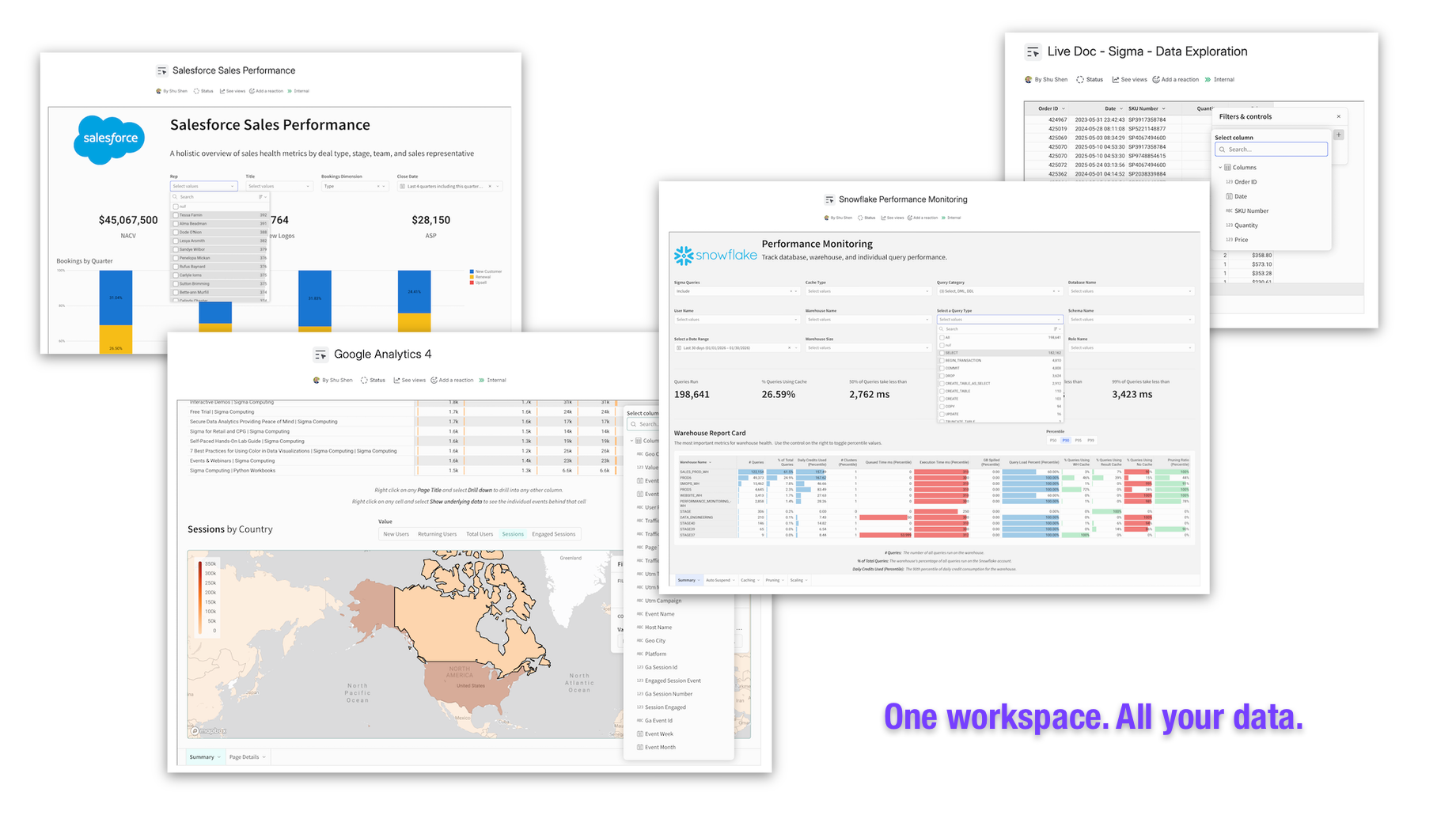

One Workspace. All Your Data.

Bring your critical business data directly into Confluence. Our proven connectors eliminate context switching and create a single source of truth for your team:

- Amazon QuickSight

- Databricks

- Datadog

- Kibana Cloud

- Sigma Computing Embedded Analytics

All are available on the Atlassian Marketplace, with thousands of satisfied users worldwide.

Atlassian Forge App Development Services

Our Marketplace apps are built on Forge, and we bring that same expertise to custom engagements. When off-the-shelf Marketplace apps do not fit, we build custom Forge apps that extend Jira and Confluence with the right UI, the right integrations, and the right security model for your team.

Security-First Delivery

Forge is secure by design, with Atlassian-hosted compute and storage, built-in authentication, tenancy isolation, and controls over how and when data leaves Atlassian Cloud. We design, build, and ship with a security-first approach.

In-Context UX

Turn extensibility into better day-to-day UX. We design in-context Jira and Confluence experiences that surface the right information and actions when they are needed, so teams can solve real business pain points without leaving their workflow.

SaaS Integrations

Connect Jira and Confluence to your existing systems. We build integrations that bring external data into context, without brittle scripts or copy-paste. From BI and observability to CRM and internal services, we design data flows and experiences so teams can act on the latest information where work happens.